Last update: 2025-06-22

Basic Wwise Concepts

Although the official Wwise learning resources are very good, after some years of teaching Wwise I've noticed that some students find it difficult to understand certain terms and workflows used in Wwise. This page aims to give students a more visual understanding of all the basic Wwise concepts.


What's middleware?

You may have heard that Wwise is an audio middleware for video games and interactive applications. This definition is not entirely accurate, so before moving forward we need a better understanding of what middleware really is.

Middleware is software that can be embedded into other software products to provide specific functionality. Nowadays, video games are usually built on top of software layers that provide the most commonly needed functions, such as 3D or 2D image rendering, physics calculations, user input handling, cinematic playback, AI processing and sound output generation. Together, these layers make up what we call the video game engine.

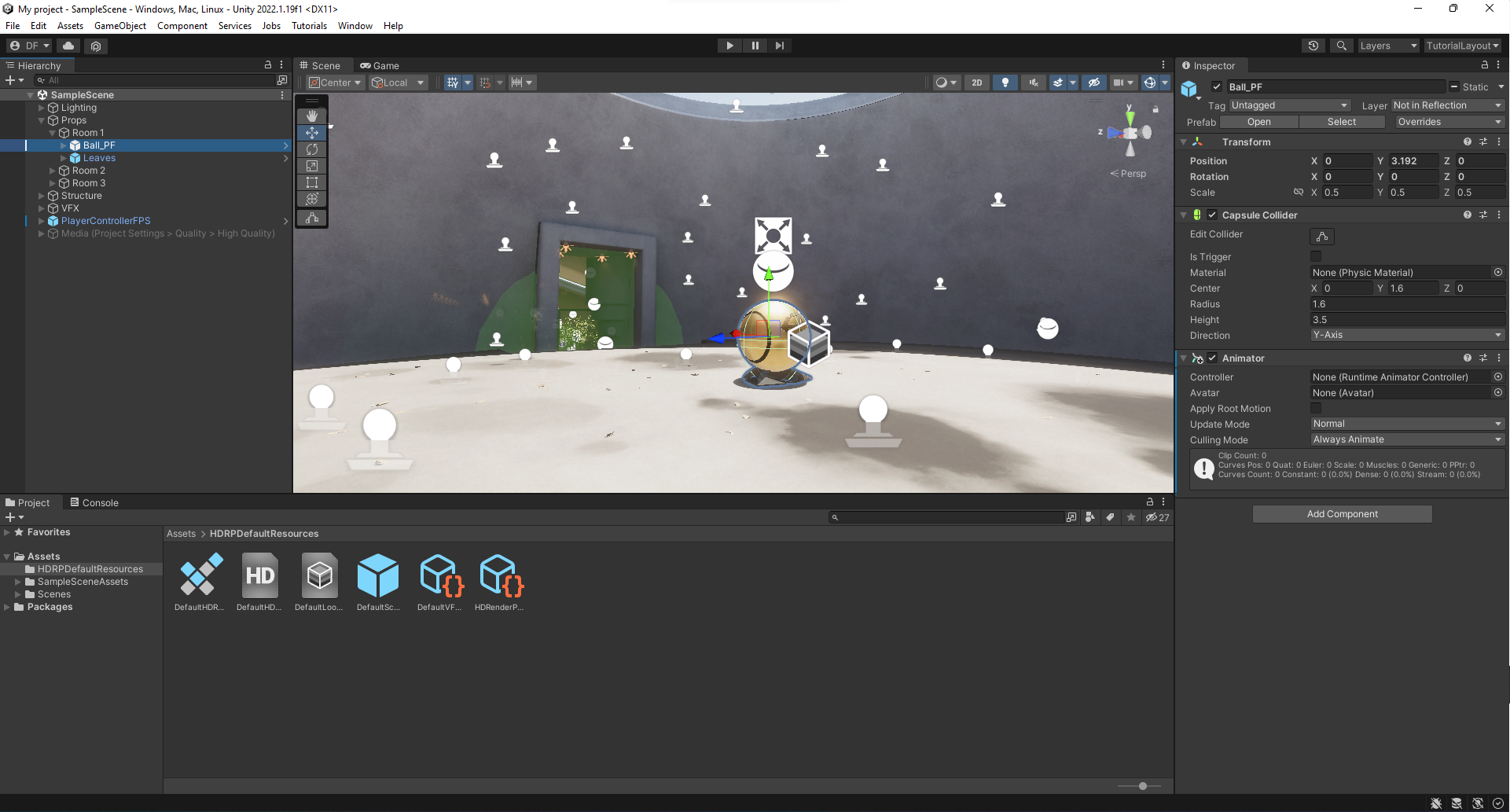

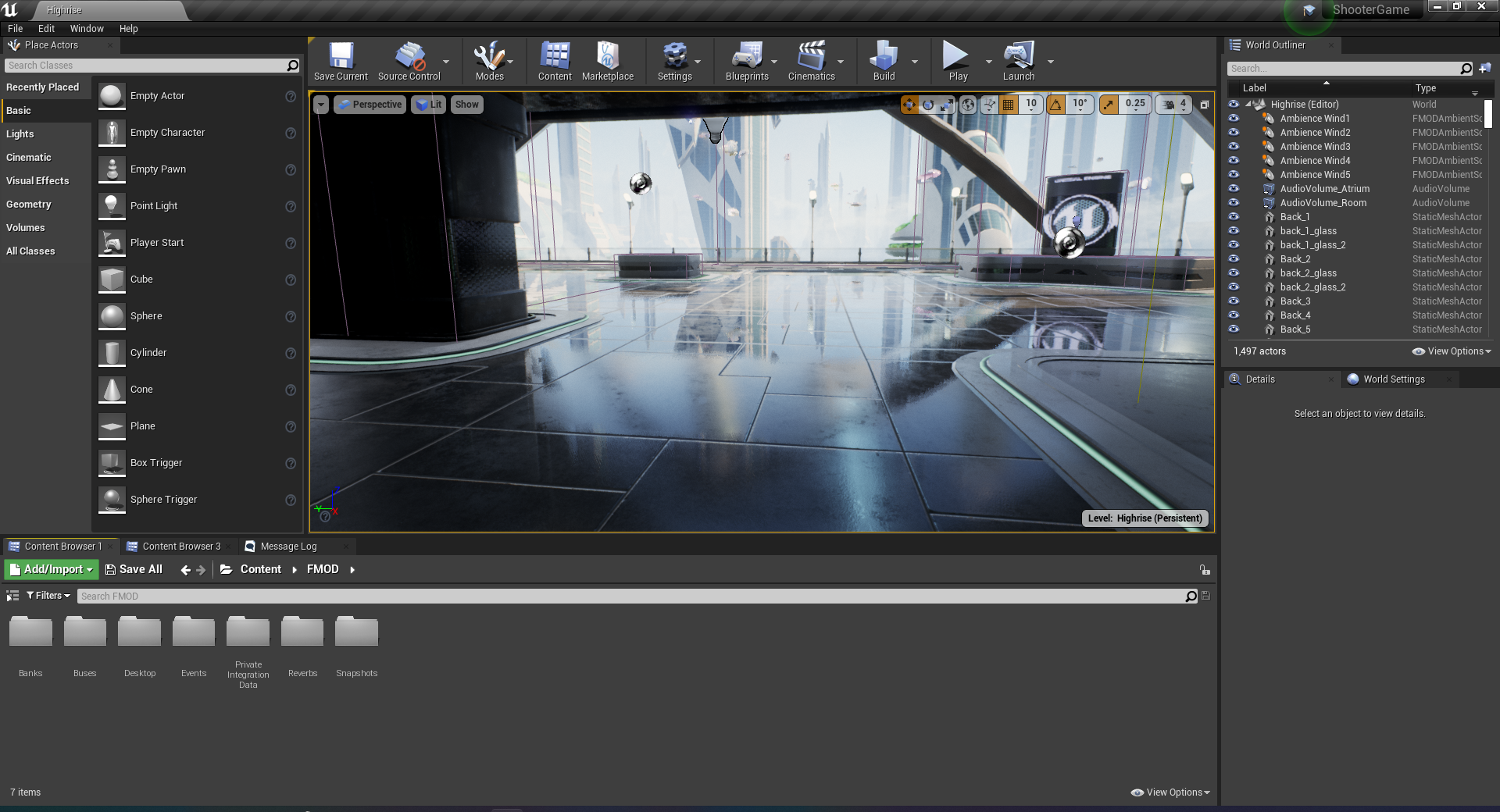

However, video game engines are not just middleware. They also include specialized tools to help with the development process. These tools are usually gathered in dedicated editors that allow the user to integrate all kinds of assets, such as raw data, 3D models, animations, video, sound and code. The screenshots show the Unity and Unreal editors, which belong to today's mainstream video game engines.

What's audio middleware?

Like all other types of middleware, Wwise is a component that can be added to the video game engine to provide specialized audio functionality. It can handle interactive 3D audio and music, and it allows very precise control for fine-tuning sound behavior. Adding it to our video game engine gives us the following layers.

Wwise sits between the video game engine and the sound output. The engine's built-in sound system is now disabled, and the video game engine sends Wwise all the context data it needs to generate the sound output.

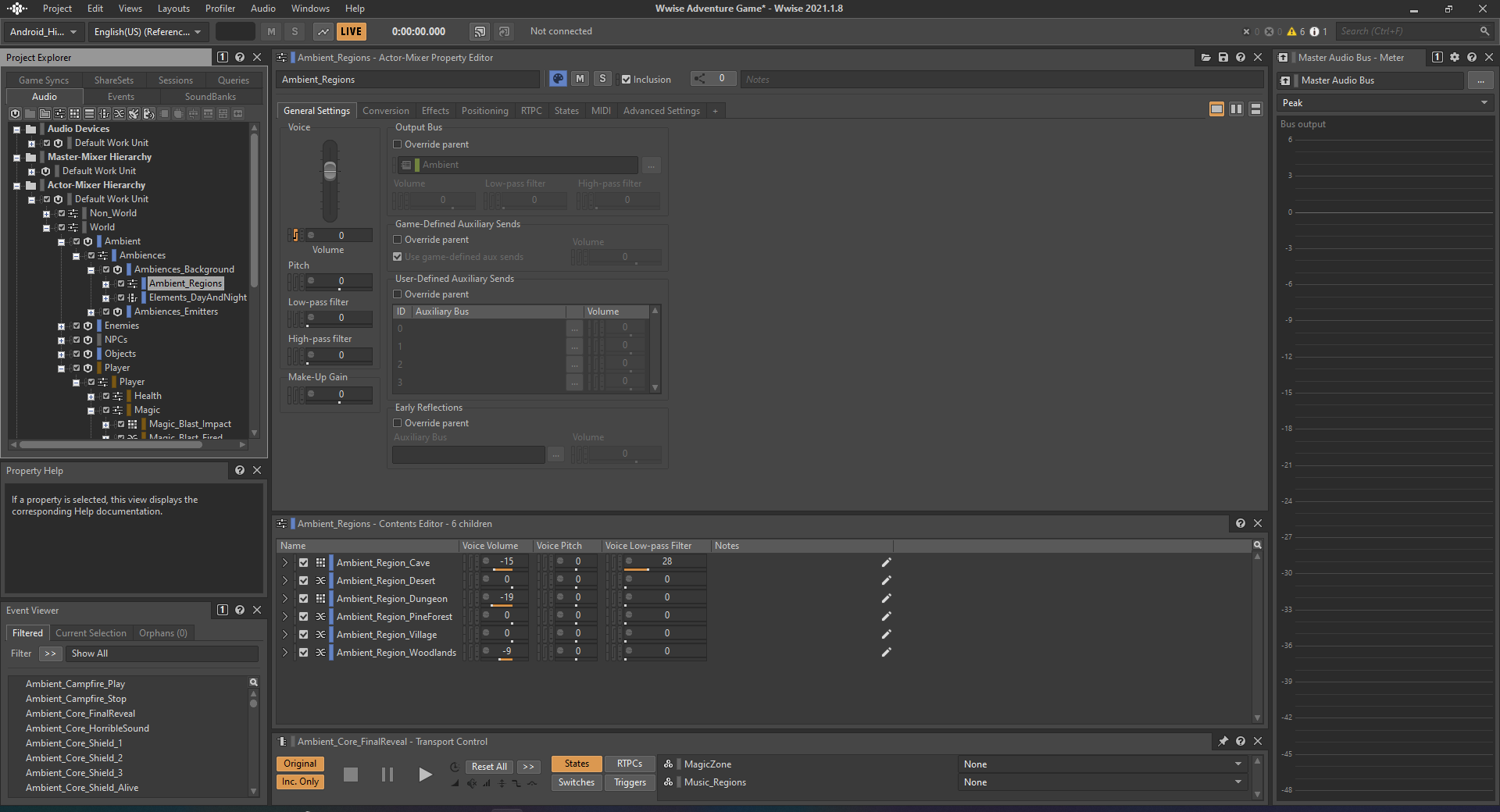
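To make this layering concrete, here is a toy Python sketch. Every name in it (`AudioLayer`, `post_event`, `GameEngine`, `on_player_fired`, `LoggingAudioMiddleware`) is hypothetical and belongs neither to the Wwise SDK nor to any game engine; it only illustrates the idea that the game reports what happened and leaves the decision about what to play to the audio layer.

```python
from typing import Protocol


class AudioLayer(Protocol):
    """Hypothetical interface the game engine talks to instead of playing sounds itself."""

    def post_event(self, event_name: str) -> None: ...


class LoggingAudioMiddleware:
    """Stand-in for an audio middleware: it only records the events it receives."""

    def __init__(self) -> None:
        self.received: list[str] = []

    def post_event(self, event_name: str) -> None:
        # A real middleware would look up the designed behavior here and render audio.
        self.received.append(event_name)


class GameEngine:
    """Gameplay code describes the situation; it never chooses a sound file."""

    def __init__(self, audio: AudioLayer) -> None:
        self.audio = audio

    def on_player_fired(self, weapon: str) -> None:
        # Forward context to the audio layer as a named event.
        self.audio.post_event(f"Fire_{weapon}_Player")


audio = LoggingAudioMiddleware()
engine = GameEngine(audio)
engine.on_player_fired("IceGem")
print(audio.received)  # ['Fire_IceGem_Player']
```

The design choice worth noticing is that `GameEngine` depends only on the small `AudioLayer` interface, so the audio behavior can change without touching gameplay code.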

Just as video game engines aren't just middleware, Wwise isn't just middleware either. It also provides a specialized tool for managing all the audio assets and defining how audio must react to game events and state/context changes: the Wwise Authoring Tool. This tool is designed for sound designers. In the Wwise workflow, the sound designer defines how audio behaves in response to game events and states, and also manages the assets (audio files). With this tool, the sound designer generates specialized asset files called soundbanks, which the Wwise middleware reads to play the game's sound according to the designed behavior.
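The split between authoring and runtime can be sketched in a few lines of Python. This is a conceptual model only: real Wwise soundbanks are binary `.bnk` files, and JSON is used here purely as a readable stand-in. The functions `generate_soundbank` and `actions_for` are hypothetical names, not Wwise calls.

```python
import json


def generate_soundbank(events: dict, sounds: dict) -> str:
    """Authoring side (hypothetical): bake the designer's decisions into bank data.

    events maps an Event name to its action list; sounds maps a Sound SFX name
    to the audio file it uses. JSON stands in for the real binary format.
    """
    return json.dumps({"events": events, "sounds": sounds})


def actions_for(bank_data: str, event_name: str) -> list:
    """Runtime side (hypothetical): the middleware reads only the generated bank,
    never the authoring project itself."""
    bank = json.loads(bank_data)
    return bank["events"].get(event_name, [])


bank_data = generate_soundbank(
    events={"Fire_IceGem_Player": [{"type": "Play", "target": "IceGem_Blast"}]},
    sounds={"IceGem_Blast": "IceGem_Blast.wav"},
)
print(actions_for(bank_data, "Fire_IceGem_Player"))
# [{'type': 'Play', 'target': 'IceGem_Blast'}]
```

Because the runtime sees only the generated data, any change made in the authoring tool reaches the game only after the bank is regenerated and loaded again.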

Other audio middleware solutions, such as FMOD, work in a similar way and provide similar tools to achieve the same goals, each with its own special features.

Wwise Workflow: First Steps

The Wwise Authoring Tool is used to define how sound will behave according to game events and state. The simplest interaction for understanding how everything works is responding to an event by playing a sound whenever it happens. This is what the first lesson of Wwise 101 does.

In this lesson, we want our sample game, Cube, to play the sound IceGem_Blast.wav whenever the player fires the IceGem weapon. To achieve this, we'll use the Wwise Authoring Tool to respond to the Fire_IceGem_Player Event posted by the game, associate the action of playing the IceGem_Blast.wav sound with it, and generate a Soundbank that contains both the sound and the behavior. The steps are simple:

- Create the Event in the Events Work Unit (the name is crucial here)

- Import the audio file into a Sound SFX in the Actor-Mixer Hierarchy Default Work Unit

- Add a Play Action to the Event's Action List to instruct Wwise to play the Sound SFX

- Create the Soundbank needed by the game, called Main (the name is also crucial here)

- Add the Event to the Soundbank

- Set up the soundbank location in User Settings and generate it
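The authoring steps above can be modeled as plain data. The sketch below is a hypothetical Python model of what the designer builds in Lesson 1; the classes `Action`, `Event` and `SoundBank` are illustrative names, not Wwise objects or APIs, and generating the bank to the User Settings folder is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    kind: str    # e.g. "Play"
    target: str  # name of the Sound SFX the action refers to


@dataclass
class Event:
    name: str  # must match, character for character, the name the game posts
    actions: list[Action] = field(default_factory=list)  # the Event's Action List


@dataclass
class SoundBank:
    name: str  # must match the bank name the game asks to load
    events: dict[str, Event] = field(default_factory=dict)
    sounds: dict[str, str] = field(default_factory=dict)  # Sound SFX name -> audio file


# Step 1: create the Event.
fire = Event("Fire_IceGem_Player")

# Step 2: import the audio file into a Sound SFX (modeled as name -> file).
actor_mixer = {"IceGem_Blast": "IceGem_Blast.wav"}

# Step 3: add a Play action targeting the Sound SFX to the Event's Action List.
fire.actions.append(Action("Play", "IceGem_Blast"))

# Step 4: create the Soundbank the game expects to load.
main = SoundBank("Main")

# Step 5: add the Event; in this model, the sounds it plays are pulled in with it.
main.events[fire.name] = fire
for action in fire.actions:
    main.sounds[action.target] = actor_mixer[action.target]

# Step 6 (generation) is not modeled; inspect the in-memory result instead.
print(sorted(main.events), main.sounds)
# ['Fire_IceGem_Player'] {'IceGem_Blast': 'IceGem_Blast.wav'}
```

The two "name is crucial" warnings show up here as plain string keys: the game can only reach this behavior if it asks for a bank called `Main` and posts an Event called `Fire_IceGem_Player`.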

Now we can play the game (we must restart it, as soundbanks are loaded when the game starts) and hear Cube play the sound we've set up whenever the event is posted. This diagram shows the flow (the left side happens in real time):

A step-by-step explanation of the real-time flow:

- Wwise is initialized

- The Main soundbank is loaded

- During the gameplay, the player fires an Ice Gem

- The game issues a Fire_IceGem_Player game call, which posts the Event with the same name to Wwise

- Wwise finds, in the Main soundbank, the action list for the Fire_IceGem_Player Event, which contains the Play IceGem_Blast Action

- Wwise plays the IceGem_Blast Sound SFX
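The real-time steps above can be traced with a toy runtime. This is a hypothetical Python model: `ToyAudioEngine`, `init`, `load_bank` and `post_event` are illustrative names and do not reproduce the real Wwise SDK, which exposes its own C++ calls. The model only shows the order of operations and the name-based lookup.

```python
class ToyAudioEngine:
    """Hypothetical stand-in for the runtime side of an audio middleware."""

    def __init__(self) -> None:
        self.initialized = False
        self.banks: dict[str, dict] = {}
        self.played: list[str] = []

    def init(self) -> None:
        self.initialized = True

    def load_bank(self, name: str, bank: dict) -> None:
        # In this model, banks can only be loaded once the engine is initialized.
        if not self.initialized:
            raise RuntimeError("initialize the engine before loading banks")
        self.banks[name] = bank

    def post_event(self, event_name: str) -> bool:
        """Find the Event's action list in the loaded banks and run it."""
        for bank in self.banks.values():
            actions = bank["events"].get(event_name)
            if actions is None:
                continue
            for kind, target in actions:
                if kind == "Play":
                    # "Play" the Sound SFX by recording the file it uses.
                    self.played.append(bank["sounds"][target])
            return True
        return False  # no loaded bank knows this Event, so nothing plays


main_bank = {
    "events": {"Fire_IceGem_Player": [("Play", "IceGem_Blast")]},
    "sounds": {"IceGem_Blast": "IceGem_Blast.wav"},
}

engine = ToyAudioEngine()
engine.init()                        # Wwise is initialized
engine.load_bank("Main", main_bank)  # the Main soundbank is loaded
# The player fires an Ice Gem, so the game posts the Event by name:
print(engine.post_event("Fire_IceGem_Player"), engine.played)  # True ['IceGem_Blast.wav']
print(engine.post_event("Fire_Icegem_Player"))  # False: a misspelled name matches nothing
```

The second call shows why the Event name matters so much: the lookup is by exact name, so a typo on either side silently produces no sound.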